Creating Software RAID on Linux

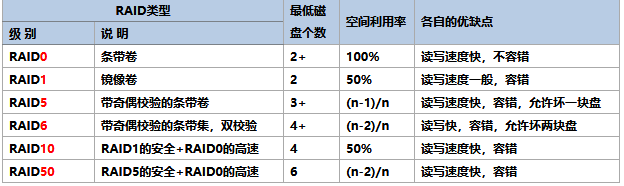

Common RAID types
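As a rough reference for the levels used below, the usable capacity of each layout can be worked out with shell arithmetic. This is a simplified sketch: it assumes all member disks are the same size, ignores metadata overhead, and assumes RAID 10 uses the default two near copies (`n2`). The function name `raid_usable` is hypothetical.

```bash
# Hypothetical helper: usable capacity of an array built from N equal-sized disks.
# Simplified model: ignores superblock/metadata overhead; RAID 10 assumes 2 copies (n2).
# Usage: raid_usable LEVEL NUM_DISKS DISK_SIZE  (any unit; the result uses the same unit)
raid_usable() {
  local level=$1 n=$2 size=$3
  case $level in
    0)  echo $(( n * size )) ;;         # striping: all space usable, no redundancy
    1)  echo "$size" ;;                 # mirroring: capacity of a single disk
    5)  echo $(( (n - 1) * size )) ;;   # one disk's worth of parity
    6)  echo $(( (n - 2) * size )) ;;   # two disks' worth of parity
    10) echo $(( n * size / 2 )) ;;     # striped mirrors, two copies of every block
    *)  echo "unsupported level: $level" >&2; return 1 ;;
  esac
}
```

With the per-device sizes mdadm reports in this article, `raid_usable 0 2 20955136` and `raid_usable 5 3 20955136` both print 41910272, and `raid_usable 10 4 1058816` prints 2117632, matching the Array Size and /proc/mdstat values shown for md1, md5 and md10.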

Common mdadm options
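The options used in this article are short forms of mdadm's long options, so `mdadm -C -v /dev/md1 -l 0 -n 2 /dev/sdb /dev/sdc` is the same as `mdadm --create --verbose /dev/md1 --level=0 --raid-devices=2 /dev/sdb /dev/sdc`. The lookup below is a hypothetical helper (`mdadm_long` is not part of mdadm) that covers only the flags used here; some mdadm letters change meaning between modes, so check `man mdadm` for anything else.

```bash
# Hypothetical lookup helper: short mdadm flags used in this article -> long form + meaning.
mdadm_long() {
  case $1 in
    -C) echo "--create: create a new array" ;;
    -v) echo "--verbose: print more detail" ;;
    -l) echo "--level: RAID level (0, 1, 5, 10, ...)" ;;
    -n) echo "--raid-devices: number of active member devices" ;;
    -x) echo "--spare-devices: number of hot spares" ;;
    -c) echo "--chunk: chunk size (KiB by default)" ;;
    -D) echo "--detail: show details of an array" ;;
    -s) echo "--scan: take missing info from the config file or /proc/mdstat" ;;
    -S) echo "--stop: stop (deactivate) an array" ;;
    -A) echo "--assemble: assemble (activate) an existing array" ;;
    -G) echo "--grow: change the size or shape of an active array" ;;
    -r) echo "--remove: remove a failed or spare device from an array" ;;
    *)  echo "not covered here: see man mdadm" >&2; return 1 ;;
  esac
}
```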

Creating RAID 0

[root@localhost ~]# mdadm -C -v /dev/md1 -l 0 -n 2 /dev/sdb /dev/sdc

mdadm: chunk size defaults to 512K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

View the array information:

[root@localhost ~]# mdadm -Ds

ARRAY /dev/md1 metadata=1.2 name=localhost.localdomain:1 UUID=8a35bcc3:9daa9c26:991ca1a6:adb567f3

[root@localhost ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Wed Sep 11 22:41:59 2019

Raid Level : raid0

Array Size : 41910272 (39.97 GiB 42.92 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Wed Sep 11 22:41:59 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Name : localhost.localdomain:1 (local to host localhost.localdomain)

UUID : 8a35bcc3:9daa9c26:991ca1a6:adb567f3

Events : 0

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

[root@localhost ~]# mdadm -Dsv

ARRAY /dev/md1 level=raid0 num-devices=2 metadata=1.2 name=localhost.localdomain:1 UUID=8a35bcc3:9daa9c26:991ca1a6:adb567f3

devices=/dev/sdb,/dev/sdc

After partitioning /dev/md1 and mounting the partition, check the capacity:

[root@localhost ~]# df -hT|grep md1p1

/dev/md1p1 xfs 40G 33M 40G 1% /md1
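mdadm -D reports sizes in KiB and prints both a binary (GiB) and a decimal (GB) form; the roughly 39.97 GiB array is what df -h rounds to 40G. A small awk sketch of that conversion, checked against the RAID 0 Array Size above; the function name `kib_to_human` is hypothetical.

```bash
# Hypothetical helper: convert a KiB count (as printed by mdadm -D) to GiB and GB.
kib_to_human() {
  awk -v kib="$1" 'BEGIN {
    bytes = kib * 1024
    # binary units (1024^3) first, then decimal units (1000^3), like mdadm prints them
    printf "%.2f GiB %.2f GB\n", bytes / (1024 ^ 3), bytes / (1000 ^ 3)
  }'
}
```

For example, `kib_to_human 41910272` prints `39.97 GiB 42.92 GB`, the same pair shown in the Array Size line.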

Creating RAID 1

[root@localhost ~]# mdadm -C -v /dev/md2 -l 1 -n 2 -x 1 /dev/sd[d,e,f]

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 20955136K

Continue creating array? (y/n) y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md2 started.

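Save the RAID information to the configuration file (mdadm -Dsv writes an ARRAY line for every active array, including md1 and md2):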

[root@localhost ~]# mdadm -Dsv >/etc/mdadm.conf
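Because `>` overwrites /etc/mdadm.conf, it is worth checking afterwards that every array you expect has an ARRAY line, since later steps such as `mdadm -As` read this file. A minimal grep-based sketch; `conf_has_array` is a hypothetical helper, and the config path is a parameter so it can be tried on a scratch copy.

```bash
# Hypothetical helper: succeed if CONF contains an ARRAY line for DEVICE.
# Usage: conf_has_array /etc/mdadm.conf /dev/md2
conf_has_array() {
  local conf=$1 dev=$2
  # Match "ARRAY <device>" followed by whitespace or end of line,
  # so /dev/md1 does not accidentally match /dev/md10.
  grep -Eq "^ARRAY[[:space:]]+${dev}([[:space:]]|\$)" "$conf"
}
```

Typical use: `conf_has_array /etc/mdadm.conf /dev/md2 || echo "md2 missing from mdadm.conf"`.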

View the RAID array details:

[root@localhost ~]# mdadm -D /dev/md2

/dev/md2:

Version : 1.2

Creation Time : Wed Sep 11 22:58:10 2019

Raid Level : raid1

Array Size : 20955136 (19.98 GiB 21.46 GB)

Used Dev Size : 20955136 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Wed Sep 11 22:59:55 2019

State : clean

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Name : localhost.localdomain:2 (local to host localhost.localdomain)

UUID : a3387788:b83edf7a:d3199d6a:15732f5d

Events : 17

Number Major Minor RaidDevice State

0 8 48 0 active sync /dev/sdd

1 8 64 1 active sync /dev/sde

2 8 80 - spare /dev/sdf
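The device table at the end of mdadm -D is easy to summarise with awk, for example to confirm that exactly one disk is a hot spare. A sketch that counts members per state; it assumes the usual layout where each member row starts with a number and ends with the device path, and the function name `md_state_counts` is hypothetical.

```bash
# Hypothetical helper: read `mdadm -D` output on stdin and count members per state.
# Assumes member rows look like "0  8  48  0  active sync  /dev/sdd";
# spare rows have "-" in the RaidDevice column and state "spare".
md_state_counts() {
  awk '$NF ~ /^\/dev\// && $1 ~ /^[0-9]+$/ {
         state = $5
         for (i = 6; i < NF; i++) state = state " " $i   # join multi-word states
         count[state]++
       }
       END { for (s in count) print s ": " count[s] }' | sort
}
```

Piping `mdadm -D /dev/md2` into it would print `active sync: 2` and `spare: 1` for the array above.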

Create a filesystem and mount it

[root@localhost ~]# mkdir /raid1

[root@localhost ~]# mkfs.xfs /dev/md2

meta-data=/dev/md2 isize=256 agcount=4, agsize=1309696 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=5238784, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@localhost ~]# mount /dev/md2 /raid1

[root@localhost ~]# df -hT|grep md2

/dev/md2 xfs 20G 33M 20G 1% /raid1

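Test RAID 1: create a file, hot-remove the spare /dev/sdf, and check that the array and the file are still intact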

[root@localhost ~]# touch /raid1/aa.txt

[root@localhost ~]# ls /raid1/

aa.txt

[root@localhost ~]# mdadm -r /dev/md2 /dev/sdf

mdadm: hot removed /dev/sdf from /dev/md2

[root@localhost ~]# mdadm -D /dev/md2

/dev/md2:

Version : 1.2

Creation Time : Wed Sep 11 22:58:10 2019

Raid Level : raid1

Array Size : 20955136 (19.98 GiB 21.46 GB)

Used Dev Size : 20955136 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Wed Sep 11 23:08:52 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Name : localhost.localdomain:2 (local to host localhost.localdomain)

UUID : a3387788:b83edf7a:d3199d6a:15732f5d

Events : 18

Number Major Minor RaidDevice State

0 8 48 0 active sync /dev/sdd

1 8 64 1 active sync /dev/sde

[root@localhost ~]# ll /raid1/

total 0

-rw-r--r--. 1 root root 0 Sep 11 23:07 aa.txt
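The test above only hot-removes the spare, so neither mirror ever loses a member. A more telling test marks an active disk as failed, removes it and adds it back; md then recovers onto the spare (if one is attached) or resyncs the re-added disk. A sketch of that sequence using mdadm's manage-mode --fail, --remove and --add options; the `run` wrapper, the `DRY_RUN` variable and `simulate_failure` are hypothetical, and with `DRY_RUN=1` the commands are only printed. Run for real, it removes redundancy until recovery finishes.

```bash
# Hypothetical wrapper: print commands when DRY_RUN=1, otherwise execute them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Simulate a disk failure in a mirror: fail -> remove -> re-add.
# Usage: simulate_failure /dev/md2 /dev/sdd
simulate_failure() {
  local md=$1 disk=$2
  run mdadm "$md" --fail "$disk" &&      # mark the member as faulty
  run mdadm "$md" --remove "$disk" &&    # detach it from the array
  run mdadm "$md" --add "$disk"          # add it back to the array
}
```

Afterwards, `cat /proc/mdstat` or `mdadm -D /dev/md2` shows the recovery progress.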

Creating RAID 5

[root@localhost ~]# mdadm -C -v /dev/md5 -l 5 -n 3 -x 1 -c32 /dev/sd{g,h,i,j}

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: size set to 20955136K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Wed Sep 11 23:14:20 2019

Raid Level : raid5

Array Size : 41910272 (39.97 GiB 42.92 GB)

Used Dev Size : 20955136 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Sep 11 23:14:34 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 32K

Rebuild Status : 13% complete

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 745d55ca:b0865062:ef4778a4:11ad4880

Events : 3

Number Major Minor RaidDevice State

0 8 96 0 active sync /dev/sdg

1 8 112 1 active sync /dev/sdh

4 8 128 2 spare rebuilding /dev/sdi

3 8 144 - spare /dev/sdj
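The "clean, degraded, recovering" state right after creation is expected: mdadm builds a new RAID 5 as a degraded array and rebuilds onto the last member, which is why /dev/sdi shows as "spare rebuilding", and the array is usable in the meantime. To script around this, the progress can be pulled out of the mdadm -D text. A sed sketch with a hypothetical helper name; it only matches the Rebuild Status and Reshape Status lines as they appear in this article.

```bash
# Hypothetical helper: print the rebuild/reshape percentage from `mdadm -D` output on stdin.
# Prints nothing when no rebuild or reshape is in progress.
md_progress() {
  sed -nE 's/.*(Rebuild|Reshape) Status *: *([0-9]+)% complete.*/\2/p'
}
```

For example, `mdadm -D /dev/md5 | md_progress` would have printed `13` at the moment the output above was captured.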

Stop the md5 array (save the configuration first so that the array can be reassembled later)

[root@localhost ~]# mdadm -Dsv >/etc/mdadm.conf

[root@localhost ~]# mdadm -S /dev/md5

mdadm: stopped /dev/md5

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version :

Raid Level : raid0

Total Devices : 0

State : inactive

Number Major Minor RaidDevice

Reactivate (assemble) the md5 array

[root@localhost ~]# mdadm -As

mdadm: /dev/md5 has been started with 3 drives and 1 spare.

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Wed Sep 11 23:14:20 2019

Raid Level : raid5

Array Size : 41910272 (39.97 GiB 42.92 GB)

Used Dev Size : 20955136 (19.98 GiB 21.46 GB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Sep 11 23:16:06 2019

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 32K

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 745d55ca:b0865062:ef4778a4:11ad4880

Events : 18

Number Major Minor RaidDevice State

0 8 96 0 active sync /dev/sdg

1 8 112 1 active sync /dev/sdh

4 8 128 2 active sync /dev/sdi

3 8 144 - spare /dev/sdj

Grow the md5 array (turn the spare /dev/sdj into a fourth active disk)

[root@localhost ~]# mdadm -G /dev/md5 -n 4 -c 32

[root@localhost ~]# mdadm -Dsv >/etc/mdadm.conf

[root@localhost ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Wed Sep 11 23:14:20 2019

Raid Level : raid5

Array Size : 41910272 (39.97 GiB 42.92 GB)

Used Dev Size : 20955136 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Sep 11 23:21:58 2019

State : clean, reshaping

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 32K

Reshape Status : 5% complete // wait until this reaches 100%

Delta Devices : 1, (3->4)

Name : localhost.localdomain:5 (local to host localhost.localdomain)

UUID : 745d55ca:b0865062:ef4778a4:11ad4880

Events : 46

Number Major Minor RaidDevice State

0 8 96 0 active sync /dev/sdg

1 8 112 1 active sync /dev/sdh

4 8 128 2 active sync /dev/sdi

3 8 144 3 active sync /dev/sdj

[root@localhost ~]#
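The reshape runs in the background, and the larger Array Size is normally only reported once it completes, hence waiting for the Reshape Status line above to reach 100%. mdadm can block on this itself with `mdadm --wait /dev/md5` (`-W`); the loop below is an alternative sketch that polls /proc/mdstat for resync, recovery or reshape activity. The `MDSTAT` override, the limits and the function name are assumptions added so it can be tried against a copy of the file.

```bash
# Hypothetical helper: wait until no resync/recovery/reshape is listed in mdstat.
# Usage: wait_md_idle [MAX_CHECKS] [INTERVAL_SECONDS]
# Returns 0 once idle, 1 if still busy after MAX_CHECKS polls, 2 if mdstat is unreadable.
wait_md_idle() {
  local max=${1:-720} interval=${2:-5} mdstat=${MDSTAT:-/proc/mdstat} i
  [ -r "$mdstat" ] || return 2
  for (( i = 0; i < max; i++ )); do
    if ! grep -Eq 'resync|recovery|reshape' "$mdstat"; then
      return 0                      # nothing in progress
    fi
    sleep "$interval"
  done
  return 1                          # still busy after max checks
}
```

With the defaults it polls every 5 seconds for up to an hour; `wait_md_idle && mdadm -D /dev/md5` would then show the final size.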

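RAID 5 can easily be grown by adding disks, but not easily shrunk: reducing the device count requires first shrinking the filesystem and then the array size (mdadm --grow --array-size), and XFS, used elsewhere in this article, does not support general shrinking. In practice, treat the array as grow-only.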

Creating RAID 10

[root@localhost ~]# fdisk -l|grep sdk

Disk /dev/sdk: 21.5 GB, 21474836480 bytes

/dev/sdk1 1 132 1060258+ 83 Linux

/dev/sdk2 133 264 1060290 83 Linux

/dev/sdk3 265 396 1060290 83 Linux

/dev/sdk4 397 528 1060290 83 Linux

[root@localhost ~]# mdadm -C -v /dev/md10 -l 10 -n 4 /dev/sdk[1-4]

mdadm: layout defaults to n2

mdadm: layout defaults to n2

mdadm: chunk size defaults to 512K

mdadm: size set to 1058816K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md10 : active raid10 sdk4[3] sdk3[2] sdk2[1] sdk1[0]

2117632 blocks super 1.2 512K chunks 2 near-copies [4/4] [UUUU]

[===========>.........] resync = 56.6% (1200000/2117632) finish=0.0min speed=240000K/sec
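In /proc/mdstat, [4/4] means 4 of 4 members are up, and each U in [UUUU] is a working member; a failed or missing member shows as `_`. A sketch that counts missing members from that field; `md_missing` is a hypothetical helper that reads mdstat text on stdin and prints one count per status field it finds.

```bash
# Hypothetical helper: count missing/failed members ("_") in mdstat fields like [UU_U].
# Reads mdstat text on stdin; prints one count per array status field found.
md_missing() {
  grep -oE '\[[U_]+\]' | while read -r field; do
    missing=${field//[^_]/}    # keep only the underscores
    echo "${#missing}"
  done
}
```

For the md10 output above, `md_missing < /proc/mdstat` would print `0`.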

[root@localhost ~]# mdadm -Dsv /dev/md10

ARRAY /dev/md10 level=raid10 num-devices=4 metadata=1.2 name=localhost.localdomain:10 UUID=0d613d8b:3eef99ac:6eb16db6:1231d1ee

devices=/dev/sdk1,/dev/sdk2,/dev/sdk3,/dev/sdk4
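Note that the four RAID 10 members here are partitions of the single disk /dev/sdk. That is fine for practising the commands, but it offers no protection if that disk fails. A sketch that flags this situation; it assumes sdX-style names, where stripping the trailing partition number yields the disk (it does not handle names such as nvme0n1p1), and the helper names are hypothetical.

```bash
# Hypothetical helper: strip the trailing partition number (sdX-style names only).
disk_of() {
  local dev=$1
  printf '%s\n' "${dev%%[0-9]*}"
}

# Succeed (exit 0) if at least two of the given members sit on the same disk.
# Usage: members_share_disk /dev/sdk1 /dev/sdk2 ...
members_share_disk() {
  local total=$# unique dev
  unique=$(( $(for dev in "$@"; do disk_of "$dev"; done | sort -u | wc -l) ))
  [ "$unique" -lt "$total" ]
}
```

For instance, `members_share_disk /dev/sdk1 /dev/sdk2 /dev/sdk3 /dev/sdk4 && echo "warning: members share a disk"` prints the warning for this array.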

Deleting a RAID array

[root@localhost ~]# umount /raid0 # if /dev/md0 is mounted (here on /raid0), unmount it first

[root@localhost ~]# mdadm -Ss # stop all RAID arrays

[root@localhost ~]# rm -f /etc/mdadm.conf # delete the RAID configuration file

[root@localhost ~]# mdadm --zero-superblock /dev/sdb # clear the RAID signature from the physical disk

[root@localhost ~]# mdadm --zero-superblock /dev/sdc # clear the RAID signature from the physical disk

Option: --zero-superblock: erase the MD superblock from a device.
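The removal steps above can be collected into one function. This is a sketch: the function name, argument order and `DRY_RUN` switch are hypothetical. Unlike `mdadm -Ss`, it stops only the named array, and it leaves /etc/mdadm.conf alone so that entries for other arrays survive (delete the matching ARRAY line by hand). With `DRY_RUN=1` it only prints the commands.

```bash
# Hypothetical wrapper: print commands when DRY_RUN=1, otherwise execute them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Tear down one md array: unmount, stop, then wipe each member's superblock.
# Usage: remove_md_array MOUNTPOINT MD_DEVICE MEMBER...
remove_md_array() {
  local mnt=$1 md=$2 dev
  shift 2
  run umount "$mnt" || return 1
  run mdadm --stop "$md" || return 1
  for dev in "$@"; do
    run mdadm --zero-superblock "$dev" || return 1   # erase the MD superblock
  done
}
```

For the RAID 0 array from the start of this article, `DRY_RUN=1 remove_md_array /md1 /dev/md1 /dev/sdb /dev/sdc` prints the four commands it would run.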

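Notice: unless otherwise stated or marked, all articles on this site are original works published here. No individual or organization may copy, misappropriate, scrape or republish this site's content on any website, in books or in any other media without this site's permission. If any content infringes the legitimate rights of an original author, please contact us and we will deal with it.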