Integrating OpenStack with the Ceph distributed storage platform

I. Ceph cluster operations

1. Create the storage pools OpenStack needs (run on a Ceph mon node)

ceph osd pool create volumes 32

ceph osd pool create images 32

ceph osd pool create backups 32

ceph osd pool create vms 32

List the pools

ceph osd pool ls
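To script this check, here is a minimal Python sketch. It compares `ceph osd pool ls` output (one pool name per line) against the four pools created above. The function names are illustrative and not part of any Ceph tooling. `check_cluster` assumes the `ceph` CLI and an admin keyring are available on the host where it runs.

```python
import subprocess

# The four pools created above for Cinder, Glance, Cinder backup and Nova.
REQUIRED_POOLS = {"volumes", "images", "backups", "vms"}


def missing_pools(pool_ls_output: str) -> set:
    """Return the required pools absent from `ceph osd pool ls` output."""
    existing = {line.strip() for line in pool_ls_output.splitlines() if line.strip()}
    return REQUIRED_POOLS - existing


def check_cluster() -> set:
    """Run the real CLI (needs ceph-common and an admin keyring) and check its output."""
    result = subprocess.run(
        ["ceph", "osd", "pool", "ls"], capture_output=True, text=True, check=True
    )
    return missing_pools(result.stdout)


# Example with captured output in which the vms pool was forgotten:
print(sorted(missing_pools("volumes\nimages\nbackups\n")))  # ['vms']
```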

2. Create the Ceph users

client.cinder: rwx on the volumes pool, rwx on the vms pool, and rx on the images pool

ceph auth get-or-create client.cinder mon "allow r" osd "allow class-read object_prefix rbd_children,allow rwx pool=volumes,allow rwx pool=vms,allow rx pool=images"

- class-read: a subset of x that grants the user the ability to call class read methods.

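- object_prefix: restricts access by object-name prefix. In the command above, the class-read grant applies only to objects whose names start with rbd_children, in any pool.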
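The following Python parser is deliberately simplified and shows what the OSD cap string above grants. It is only an illustration. It understands just `allow <perms> [pool=...]` clauses and `object_prefix`, not Ceph's full cap grammar (for example `profile rbd` or namespaces raise an error here).

```python
def parse_osd_caps(caps: str) -> list:
    """Split a simple OSD cap string into grants (simplified; not Ceph's full grammar)."""
    grants = []
    for clause in caps.split(","):
        words = clause.split()
        if len(words) < 2 or words[0] != "allow":
            raise ValueError(f"unsupported clause: {clause!r}")
        grant = {"perms": words[1], "pool": None, "object_prefix": None}
        rest = words[2:]
        i = 0
        while i < len(rest):
            if rest[i].startswith("pool="):
                grant["pool"] = rest[i].split("=", 1)[1]
            elif rest[i] == "object_prefix" and i + 1 < len(rest):
                grant["object_prefix"] = rest[i + 1]
                i += 1  # skip the prefix value itself
            i += 1
        grants.append(grant)
    return grants


def pool_perms(caps: str) -> dict:
    """Map each explicitly named pool to its permission string."""
    return {g["pool"]: g["perms"] for g in parse_osd_caps(caps) if g["pool"]}


CINDER_OSD_CAPS = ("allow class-read object_prefix rbd_children,"
                   "allow rwx pool=volumes,allow rwx pool=vms,allow rx pool=images")

print(pool_perms(CINDER_OSD_CAPS))  # {'volumes': 'rwx', 'vms': 'rwx', 'images': 'rx'}
```

The class-read clause has no pool, so it shows up only in `parse_osd_caps`, with `object_prefix` set to `rbd_children`.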

client.glance: rwx on the images pool

ceph auth get-or-create client.glance mon "allow r" osd "allow class-read object_prefix rbd_children,allow rwx pool=images"

client.cinder-backup: rwx on the backups pool

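ceph auth get-or-create client.cinder-backup mon "profile rbd" osd "profile rbd pool=backups"

The rbd profile defines the access rights of the new cinder-backup account. Client applications then use this account for block-based access to Ceph storage through RADOS Block Devices (RBD).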

3. Export the keyrings

cd /etc/ceph/

ceph auth get client.glance -o ceph.client.glance.keyring

ceph auth get client.cinder -o ceph.client.cinder.keyring

ceph auth get client.cinder-backup -o ceph.client.cinder-backup.keyring

II. OpenStack cluster operations

1. Copy the Ceph keyrings and configuration file (on every OpenStack node)

mkdir -p /etc/ceph/

cd /etc/ceph/

scp root@ceph1:/etc/ceph/ceph.client.glance.keyring /etc/ceph/

scp root@ceph1:/etc/ceph/ceph.client.cinder.keyring /etc/ceph/

scp root@ceph1:/etc/ceph/ceph.client.cinder-backup.keyring /etc/ceph/

scp root@192.168.200.30:/etc/ceph/ceph.conf /etc/ceph/
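Before you move on, the following sketch checks that the copied files are in place on a node. The file list mirrors the scp commands above. The sketch treats a `key = ` line as evidence of a usable keyring, which is an assumption based on the format `ceph auth get` writes.

```python
from pathlib import Path

# Files copied into /etc/ceph by the scp commands above.
EXPECTED_FILES = [
    "ceph.conf",
    "ceph.client.glance.keyring",
    "ceph.client.cinder.keyring",
    "ceph.client.cinder-backup.keyring",
]


def check_ceph_dir(ceph_dir: str = "/etc/ceph") -> list:
    """Return a list of problems; an empty list means all files look usable."""
    problems = []
    for name in EXPECTED_FILES:
        path = Path(ceph_dir) / name
        if not path.is_file():
            problems.append(f"missing: {path}")
        elif name.endswith(".keyring") and "key = " not in path.read_text():
            problems.append(f"no key line: {path}")
    return problems


if __name__ == "__main__":
    for problem in check_ceph_dir():
        print(problem)
```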

2. Add the libvirt secret (on compute nodes)

Generate a UUID for the secret (note: if there are multiple compute nodes, they must all use the same UUID)

uuidgen

cd /etc/ceph/

UUID=bf168fa8-8d5b-4991-ba4c-12ae622a98b1
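The heredoc below writes the libvirt secret definition by hand. For comparison, the following Python sketch builds the same XML with the standard library and validates the UUID. Reusing one fixed UUID is what keeps all compute nodes consistent. The node names in the loop are hypothetical.

```python
import uuid
import xml.etree.ElementTree as ET


def render_secret_xml(secret_uuid: str, name: str = "client.cinder secret") -> str:
    """Build the libvirt <secret> definition that the heredoc writes."""
    uuid.UUID(secret_uuid)  # raises ValueError for a malformed UUID
    secret = ET.Element("secret", {"ephemeral": "no", "private": "no"})
    ET.SubElement(secret, "uuid").text = secret_uuid
    usage = ET.SubElement(secret, "usage", {"type": "ceph"})
    ET.SubElement(usage, "name").text = name
    return ET.tostring(secret, encoding="unicode")


# Generate once (str(uuid.uuid4()) is the Python counterpart of uuidgen),
# then reuse the same value everywhere.
SECRET_UUID = "bf168fa8-8d5b-4991-ba4c-12ae622a98b1"

for node in ["compute01", "compute02"]:  # hypothetical compute node names
    # Identical XML for every node, so every node gets the same secret UUID.
    print(node, render_secret_xml(SECRET_UUID))
```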

cat > secret.xml << EOF
<secret ephemeral='no' private='no'>
  <uuid>$UUID</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF

Define the secret

virsh secret-define --file secret.xml

List the secrets

virsh secret-list

Set the secret value to the client.cinder key

cat ceph.client.cinder.keyring
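The next command uses grep and awk to pull the base64 key out of the keyring. The following Python sketch performs the same extraction but reads only the `[client.cinder]` section, so `caps` lines are never picked up. The sample key is a placeholder, not a real secret.

```python
def keyring_key(keyring_text: str, entity: str = "client.cinder") -> str:
    """Return the base64 key of one entity from a Ceph keyring file's text.

    Same result as `grep key ... | awk '{print $3}'` for a single-entity
    keyring, but it only reads the [entity] section and ignores caps lines.
    """
    section = None
    for raw in keyring_text.splitlines():
        line = raw.strip()
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1]
        elif section == entity and "=" in line:
            name, value = line.split("=", 1)  # the key itself may end in "=="
            if name.strip() == "key":
                return value.strip()
    raise KeyError(f"no key found for {entity}")


# Placeholder keyring in the layout `ceph auth get` writes (not a real key).
SAMPLE_KEYRING = """[client.cinder]
\tkey = AQBplaceholderplaceholderplaceholder0==
\tcaps mon = "allow r"
\tcaps osd = "allow rwx pool=volumes"
"""

print(keyring_key(SAMPLE_KEYRING))  # AQBplaceholderplaceholderplaceholder0==
```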

virsh secret-set-value --secret ${UUID} --base64 $(grep key ceph.client.cinder.keyring | awk '{print $3}')

3. Install the Ceph client (on every OpenStack node)

yum install -y ceph-common

4. Glance backend storage (controller node)

chown glance:glance /etc/ceph/ceph.client.glance.keyring

vim /etc/glance/glance-api.conf

[glance_store]

# stores = file,http

# default_store = file

# filesystem_store_datadir = /var/lib/glance/images/

stores = rbd,file,http

default_store = rbd

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8

Install the required module

yum install -y python-boto3

Restart the service

systemctl restart glance-api

Upload an image to test

openstack image create centos7_rbd000 --disk-format qcow2 --file centos7.9-moban.qcow2

Verify (on the Ceph admin node)

rbd ls images

5. Cinder backend storage (on every OpenStack node)

Change the keyring ownership

chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

Edit the Cinder configuration file (controller node)

vim /etc/cinder/cinder.conf

[DEFAULT]

default_volume_type = ceph

Restart the Cinder scheduler

systemctl restart cinder-scheduler

Edit the Cinder configuration file (compute nodes)

vim /etc/cinder/cinder.conf

[DEFAULT]

enabled_backends = ceph,lvm

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

rbd_secret_uuid = bf168fa8-8d5b-4991-ba4c-12ae622a98b1

volume_backend_name = ceph
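Every entry in `enabled_backends` needs a matching section. Each section's `volume_backend_name` is the value the volume type matches later. The following Python sketch reads a cinder.conf fragment and reports that mapping. The fragment repeats only part of the `[ceph]` section above. The `[lvm]` section is assumed to live elsewhere in the real file, so for this fragment the sketch reports it as `None`.

```python
import configparser

# Part of the cinder.conf shown above; the [lvm] section is not included.
CINDER_CONF = """
[DEFAULT]
enabled_backends = ceph,lvm

[ceph]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
rbd_pool = volumes
rbd_user = cinder
volume_backend_name = ceph
"""


def backend_names(conf_text: str) -> dict:
    """Map each entry of enabled_backends to its volume_backend_name.

    None means the backend has no [section] (or the section sets no name).
    """
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    raw = parser.get("DEFAULT", "enabled_backends")
    enabled = [b.strip() for b in raw.split(",") if b.strip()]
    return {
        backend: parser.get(backend, "volume_backend_name", fallback=None)
        if parser.has_section(backend) else None
        for backend in enabled
    }


print(backend_names(CINDER_CONF))  # {'ceph': 'ceph', 'lvm': None}
```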

Restart the service to apply the changes

systemctl restart cinder-volume

Create the volume type (controller node)

openstack volume type create ceph

cinder --os-username admin --os-tenant-name admin type-key ceph set volume_backend_name=ceph
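Setting `volume_backend_name=ceph` on the type is what lets the scheduler choose the `[ceph]` backend. The following sketch is a simplified Python model of that matching. It uses plain string equality only, whereas Cinder's capabilities filter also understands operators such as `<is>` and `>=`. The backend host names and the lvm capability value are hypothetical.

```python
def matching_backends(type_extra_specs: dict, backends: dict) -> list:
    """Return the backends whose reported capabilities satisfy every extra spec.

    Simplified model: plain string equality only; Cinder's real filter also
    understands operators such as '<is> True' or '>= 10'.
    """
    return [
        host for host, capabilities in backends.items()
        if all(capabilities.get(key) == value for key, value in type_extra_specs.items())
    ]


# Capabilities as two backends might report them (hypothetical host names;
# "host@backend" is how Cinder labels a backend service).
BACKENDS = {
    "compute01@ceph": {"volume_backend_name": "ceph"},
    "compute01@lvm": {"volume_backend_name": "lvm"},
}

# Extra specs of the "ceph" volume type after the type-key command above.
CEPH_TYPE_SPECS = {"volume_backend_name": "ceph"}

print(matching_backends(CEPH_TYPE_SPECS, BACKENDS))  # ['compute01@ceph']
```

A type with no extra specs matches every backend, which is why the type-key step matters when both ceph and lvm are enabled.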

List the volume types

openstack volume type list

Create a test volume

openstack volume create ceph01 --type ceph --size 1

Check on the Ceph admin node

rbd ls volumes

6. Volume backup

Configure volume backup (compute nodes)

chown cinder:cinder /etc/ceph/ceph.client.cinder-backup.keyring

Edit the configuration file

vim /etc/cinder/cinder.conf

[DEFAULT]

backup_driver = cinder.backup.drivers.ceph.CephBackupDriver

backup_ceph_conf = /etc/ceph/ceph.conf

backup_ceph_user = cinder-backup

backup_ceph_chunk_size = 4194304

backup_ceph_pool = backups

backup_ceph_stripe_unit = 0

backup_ceph_stripe_count = 0

restore_discard_excess_bytes = true
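`backup_ceph_chunk_size` is in bytes, so 4194304 is 4 MiB. When the backup driver copies a volume as a plain data stream (for example, a volume on the lvm backend), it moves the data in chunks of this size. For RBD-backed volumes, the driver can use RBD differential export/import instead. The following arithmetic sketch uses a 1 GiB volume as an example.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB

# Value of backup_ceph_chunk_size in the cinder.conf above (bytes).
BACKUP_CEPH_CHUNK_SIZE = 4194304


def transfer_chunks(volume_bytes: int, chunk_bytes: int = BACKUP_CEPH_CHUNK_SIZE) -> int:
    """Chunks needed to stream volume_bytes in chunk_bytes pieces (ceiling division)."""
    return -(-volume_bytes // chunk_bytes)


print(BACKUP_CEPH_CHUNK_SIZE // MIB)  # 4, i.e. the chunk size is 4 MiB
print(transfer_chunks(1 * GIB))       # 256 chunks for a 1 GiB volume
```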

Restart the backup service

systemctl restart cinder-backup

Create a volume backup (controller node)

openstack volume backup create --name ceph_backup ceph01

Verify (on the Ceph admin node)

rbd ls backups

7. Nova configuration

Edit the Nova configuration file (compute nodes)
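The `rbd_secret_uuid` you set in nova.conf next must equal both the UUID of the libvirt secret from step 2 and `rbd_secret_uuid` in cinder.conf. The following Python sketch performs that cross-check. It runs on inline config fragments copied from this guide. To check the real files, use `configparser`'s `read()` instead of `read_string()`.

```python
import configparser

LIBVIRT_SECRET_UUID = "bf168fa8-8d5b-4991-ba4c-12ae622a98b1"  # from `virsh secret-list`

# Fragments of the two files as configured in this guide.
NOVA_CONF = """
[libvirt]
images_type = rbd
rbd_user = cinder
rbd_secret_uuid = bf168fa8-8d5b-4991-ba4c-12ae622a98b1
"""
CINDER_CONF = """
[ceph]
rbd_user = cinder
rbd_secret_uuid = bf168fa8-8d5b-4991-ba4c-12ae622a98b1
"""


def read_option(conf_text: str, section: str, option: str):
    """Return one option from INI-style config text, or None if it is absent."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    return parser.get(section, option, fallback=None)


found = {
    "nova [libvirt]": read_option(NOVA_CONF, "libvirt", "rbd_secret_uuid"),
    "cinder [ceph]": read_option(CINDER_CONF, "ceph", "rbd_secret_uuid"),
}
mismatched = [where for where, value in found.items() if value != LIBVIRT_SECRET_UUID]
print(mismatched or "all rbd_secret_uuid values match the libvirt secret")
```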

vim /etc/nova/nova.conf

[DEFAULT]

live_migration_flag = "VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE"

[libvirt]

images_type = rbd

images_rbd_pool = vms

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

rbd_secret_uuid = bf168fa8-8d5b-4991-ba4c-12ae622a98b1

Restart the Nova compute service

systemctl restart nova-compute

Create a test instance

openstack server create --flavor 2C4G --image centos7_rbd --nic net-id=ac5b3640-c952-4b7d-b4fe-fab7ce4e422d --security-group default centos7-test

Check on the Ceph admin node
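`rbd ls vms` lists every RBD image in the pool. To look for one specific object, you need the default names each service gives its RBD images: Glance uses the image ID, Cinder uses `volume-<volume ID>` (its default `volume_name_template`), and Nova uses `<instance UUID>_disk` for the root disk. The following Python sketch is built on those assumed defaults. The instance ID is a hypothetical placeholder.

```python
# Default RBD image names per pool (assumed defaults; Cinder's prefix comes
# from its volume_name_template option and can be changed).
NAME_FORMAT = {
    "images": "{}",          # Glance: the Glance image ID
    "volumes": "volume-{}",  # Cinder: volume-<volume ID>
    "vms": "{}_disk",        # Nova: <instance UUID>_disk (root disk)
}


def present_in_pool(pool: str, object_id: str, rbd_ls_output: str) -> bool:
    """True if `rbd ls <pool>` output contains the RBD image for object_id."""
    expected = NAME_FORMAT[pool].format(object_id)
    return expected in {line.strip() for line in rbd_ls_output.splitlines()}


INSTANCE_ID = "00000000-0000-0000-0000-000000000001"  # hypothetical instance UUID
print(present_in_pool("vms", INSTANCE_ID, f"{INSTANCE_ID}_disk\n"))  # True
```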

rbd ls vms

8. Live migration

Configuration (compute nodes)

cp /etc/libvirt/libvirtd.conf{,.bak}

vim /etc/libvirt/libvirtd.conf

listen_tls = 0

listen_tcp = 1

tcp_port = "16509"

listen_addr = "192.168.100.104" # use this host's own address

auth_tcp = "none"

cp /etc/default/libvirtd{,.bak}

vim /etc/default/libvirtd

# Enable listening

LIBVIRTD_ARGS="--listen"

Mask the libvirtd systemd socket units (socket activation conflicts with running libvirtd with --listen)

systemctl mask libvirtd.socket libvirtd-ro.socket libvirtd-admin.socket libvirtd-tls.socket libvirtd-tcp.socket

Restart libvirtd

systemctl restart libvirtd

Restart nova-compute

systemctl restart nova-compute

Test whether the compute nodes can reach each other
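Before you run the virsh command below, a plain TCP connect is a quick way to rule out firewall or `listen_addr` problems on the target node. The following minimal Python sketch assumes the `tcp_port` of 16509 configured above. It only proves that something is listening on that port, not that it is libvirtd or that `auth_tcp` will accept the connection.

```python
import socket


def libvirt_tcp_reachable(host: str, port: int = 16509, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name not resolvable
        return False
```

From the source node, `libvirt_tcp_reachable("compute02")` should return True once the target's libvirtd is listening.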

virsh -c qemu+tcp://compute02/system

List the instances

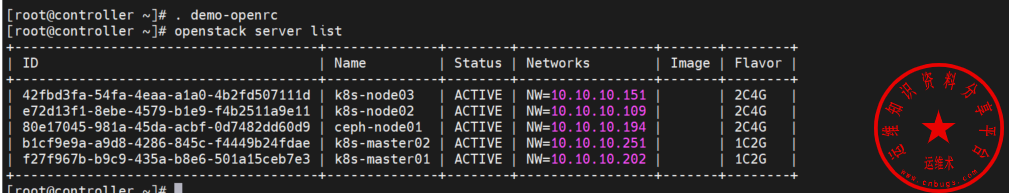

openstack server list

Show the details of the instance to migrate

openstack server show 42fbd3fa-54fa-4eaa-a1a0-4b2fd507111d

Migrate the instance

nova live-migration 42fbd3fa-54fa-4eaa-a1a0-4b2fd507111d compute02